Therefore, it is important to be aware of the potential challenges and to take steps to overcome them. To make the most of global digitalization, organizations have access to Big Data that they can use to drive business development, research, and innovation. The FortiWeb web application firewall comes with predefined rules that can recognize harmful web scrapers.

- As requirements differ between companies, Grepsr has provided high-quality custom web scraping solutions for businesses of all shapes and sizes.

- Known as contact scraping, this automates the process of finding the right contact information for a marketing lead.

- At the same time, like any other emerging market, web scraping brings legal issues as well.

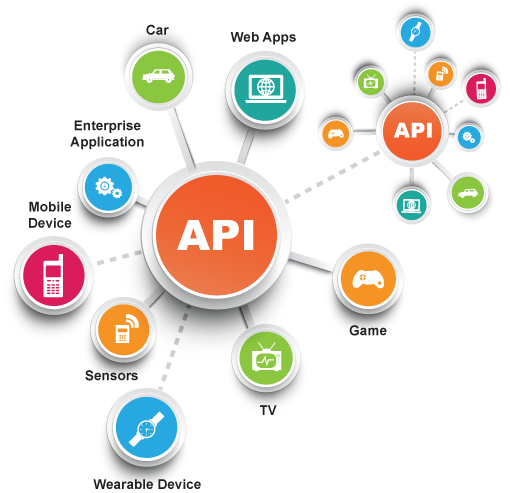

This information can be used to optimize digital marketing campaigns and improve brand awareness. In e-market research, web scraping is used to gather information on competitors' products, pricing strategies, and advertising campaigns. This data can be used to gain insights into the market and make data-driven decisions. The first approach that comes to mind is to collect the information manually by hiring people to track details on the sources of interest. However, the productivity of such work will be low, and the likelihood of error due to the human factor will be high.

Services

Organizations must obtain consent or have a legitimate interest in the data they are collecting, and must ensure that the extracted data is used ethically and responsibly. Being transparent about the use of web scraping tools and the data being collected is essential. Organizations should communicate the purpose of the data collection and obtain consent from the individuals involved. Additionally, with FortiGuard web filtering services, your system can be protected from a wide range of web-based attacks, including those designed to infiltrate your site with scraper malware. With FortiGuard, you get granular filtering and blocking capabilities, and FortiGuard automatically updates its tools on a regular basis using the latest threat intelligence. You can also choose whether updates are automatically pushed to your system or whether you pull them when and how it is convenient for you.


They deliver data in a range of formats, such as CSV, JSON, JSON Lines, or XML. So you've visually inspected the website you want to scrape, identified the elements you'll need, and run your script. The problem is that scrapers can only extract data from what they can find in the HTML file, and not dynamically injected content.
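The limitation with dynamically injected content can be illustrated with a minimal sketch. It assumes a hypothetical page (`RAW_HTML`) whose price is filled in by JavaScript after load. The helper names `TextByTag` and `extract_text` are invented for this example, and only Python's standard-library `html.parser` is used. A real scraper would likely use a dedicated parsing library, but the outcome would be the same: text that is not in the raw HTML cannot be extracted from it.

```python
from html.parser import HTMLParser

# Hypothetical page: the price is written by JavaScript after the page loads,
# so the raw HTML only contains an empty placeholder element.
RAW_HTML = """
<html><body>
  <h1 class="title">Example product</h1>
  <span id="price"></span>
  <script>document.getElementById("price").textContent = "19.99";</script>
</body></html>
"""


class TextByTag(HTMLParser):
    """Collect the text found inside each element, skipping <script> bodies."""

    def __init__(self):
        super().__init__()
        self._stack = []  # currently open tags
        self.text = {}    # tag name -> list of text fragments

    def handle_starttag(self, tag, attrs):
        self._stack.append(tag)

    def handle_endtag(self, tag):
        if self._stack and self._stack[-1] == tag:
            self._stack.pop()

    def handle_data(self, data):
        # Ignore whitespace-only fragments and the JavaScript source itself.
        if self._stack and self._stack[-1] != "script" and data.strip():
            self.text.setdefault(self._stack[-1], []).append(data.strip())


def extract_text(html):
    """Parse the HTML string and return the text grouped by tag name."""
    parser = TextByTag()
    parser.feed(html)
    return parser.text


result = extract_text(RAW_HTML)
print(result.get("h1"))    # the static title is present in the raw HTML
print(result.get("span"))  # None: the price never appears in the raw HTML
```

To capture the price in a case like this, the page would have to be rendered in something that executes JavaScript, such as a headless browser, before the HTML is parsed.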

Web Scraping Software

Richard brings over 20 years of website development, SEO, and marketing to the table. A graduate in Computer Science, Richard has lectured in Java programming and has built software for companies including Samsung and ASDA. Now, he writes for TechRadar, Tom's Guide, PC Gamer, and Creative Bloq.


In reality, though, the process isn't carried out just once, but countless times. This comes with its own swathe of problems that need solving. For example, badly coded scrapers may send too many HTTP requests, which can crash a website. Every website also has different rules for what bots can and can't do.
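One common way to avoid flooding a site with requests is to enforce a minimum delay between them. The sketch below is illustrative only: the `Throttle` class, its parameters, and the 0.2-second interval are assumptions made for this example, not part of any particular scraping tool. The clock and sleep functions can be swapped out so the logic can be checked without actually waiting.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last = None                 # time the previous request was released

    def wait(self):
        """Pause if the previous request was too recent; return the delay used."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
            if delay > 0:
                self._sleep(delay)
        self._last = now + delay
        return delay


if __name__ == "__main__":
    throttle = Throttle(min_interval=0.2)
    for page in ["/page/1", "/page/2", "/page/3"]:  # hypothetical paths
        waited = throttle.wait()
        # A real scraper would issue its HTTP request here.
        print(f"waited {waited:.2f}s before requesting {page}")
```

A fixed interval is the simplest policy. A production scraper might also back off when the server responds with errors, but that is beyond this sketch.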

Take your data collection process to the next level from $50/month + VAT. To prevent web scraping, website operators can take a range of different measures. The robots.txt file, for example, is used to block search engine bots.
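As a rough illustration of how a well-behaved bot consults these rules, the sketch below uses `urllib.robotparser` from Python's standard library. The robots.txt content, the `ExampleBot` user agent, and the `example.com` URLs are hypothetical, and `allowed` is a helper invented for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def allowed(robots_txt, user_agent, url):
    """Return True if the given rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/products"))
print(allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/private/report"))
```

Note that robots.txt is advisory: it only affects bots that choose to check it, so operators who want to block scrapers outright typically combine it with other measures.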